In our last blog post, we looked at cases showing how data can be collected reliably in areas with poor internet coverage. The NetXMS zoning feature was key there, and it also comes in handy when connectivity is not an issue but the amount of data is. Imagine needing to monitor thousands of network devices over SNMP, collecting hundreds of metrics from each device. Sounds challenging enough? Add to this checking the collected data for threshold violations, storing it for several weeks for quick access, and exporting it to an external database for analytics and visualisation.

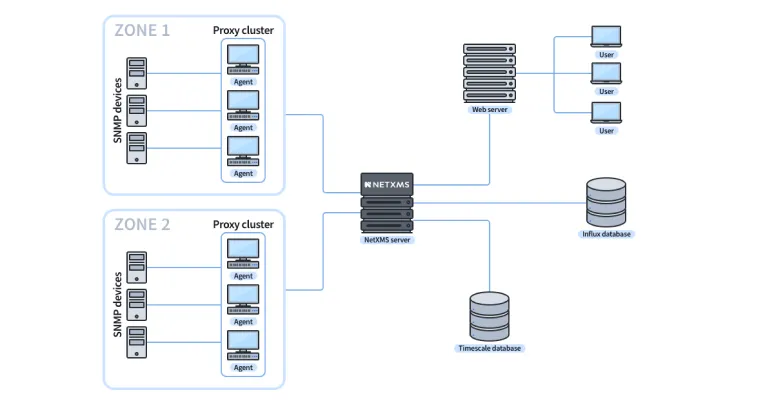

Zoning allows a network to be split into separate zones, with the server communicating with the devices in each zone via a proxy. Proxies solve the load balancing problem: they help deal with large flows of data by letting monitoring capacity scale horizontally. With zoning enabled, instead of polling SNMP-capable devices and servers in each location directly from a central monitoring server, the server uploads the data collection schedule to the proxy, which then polls on the server's behalf. Proxies can easily be added or removed to match the expansion or contraction of the network.

At the same time, zoning ensures high availability: if one proxy fails for some reason, the other proxy agents take over its data collection and distribute it evenly among themselves. This way, the failure of one proxy does not cause an overload, and data collection is not interrupted even for a moment, which would be catastrophic for a system of this scale. While doing their primary job, proxies also monitor themselves and report their status to the server so that data collection can be optimised quickly and effectively.
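To make the failover idea concrete, here is a minimal Python sketch of spreading devices evenly across proxies and redistributing a failed proxy's share among the survivors. It is only an illustration of the principle: the function names, proxy names and device names are hypothetical, and this is not how NetXMS implements its own balancing.

```python
def assign(devices, proxies):
    """Spread devices round-robin so proxy loads differ by at most one."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    assignment = {proxy: [] for proxy in proxies}
    for i, device in enumerate(sorted(devices)):
        assignment[proxies[i % len(proxies)]].append(device)
    return assignment


def fail_over(assignment, failed):
    """Hand each device of the failed proxy to the least-loaded survivor."""
    survivors = {p: list(d) for p, d in assignment.items() if p != failed}
    if not survivors:
        raise RuntimeError("no proxies left in the zone")
    for device in assignment.get(failed, []):
        # Ties are broken by proxy name so the result is deterministic.
        target = min(survivors, key=lambda p: (len(survivors[p]), p))
        survivors[target].append(device)
    return survivors


# Hypothetical zone: ten devices polled by three proxies.
devices = [f"switch-{n:03d}" for n in range(10)]
zone = assign(devices, ["proxy-a", "proxy-b", "proxy-c"])
after = fail_over(zone, "proxy-b")
for proxy, polled in after.items():
    print(proxy, len(polled))
```

In this toy run the two remaining proxies end up with five devices each, so no single proxy absorbs the whole load of the failed one.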

Another feature, the fan-out data driver, was used to feed the data into an external database, InfluxDB, where it was used for analytics and visualisations.

A particular problem we faced was storage of the collected performance data. Traditional SQL databases (Oracle, PostgreSQL, Microsoft SQL Server and others) are not very efficient at deleting relatively small portions of data from huge tables. This is why, to improve performance and solve housekeeping issues, we used a specialised time series database, TimescaleDB, as the backend database.
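The reason a time series database helps with housekeeping can be shown with a toy model. TimescaleDB partitions a table by time into chunks, so expired data can be removed by dropping whole chunks rather than deleting individual rows from one huge table. The Python class below only imitates that idea in memory; the class name, the one-day chunk interval and the sample data are assumptions for illustration and say nothing about the configuration used in this deployment.

```python
from collections import defaultdict

CHUNK_SECONDS = 24 * 3600  # hypothetical chunk interval: one day


class ChunkedStore:
    """Toy time-partitioned store: rows are grouped by the day they belong to."""

    def __init__(self):
        self.chunks = defaultdict(list)  # chunk start time -> list of rows

    def insert(self, ts: int, metric: str, value: float) -> None:
        chunk_start = ts - ts % CHUNK_SECONDS
        self.chunks[chunk_start].append((ts, metric, value))

    def drop_older_than(self, cutoff: int) -> int:
        """Drop every chunk that ends at or before cutoff.

        Whole chunks are discarded without inspecting their rows.
        Returns the number of chunks dropped.
        """
        expired = [s for s in self.chunks if s + CHUNK_SECONDS <= cutoff]
        for start in expired:
            del self.chunks[start]
        return len(expired)


store = ChunkedStore()
for hour in range(5 * 24):  # five days of hourly samples
    store.insert(hour * 3600, "cpu_load", 0.5)

dropped = store.drop_older_than(3 * CHUNK_SECONDS)  # keep the last two days
remaining_rows = sum(len(rows) for rows in store.chunks.values())
print(dropped, remaining_rows)
```

The cost of the cleanup depends on the number of chunks, not on the number of rows they hold, which is the property that makes retention cheap at this scale.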

The combination of NetXMS features and a custom mix of databases allowed us to create a robust, flexible and elegant setup for an operation of this scale.