How it all started

It was 8 AM when I settled into a cozy café above the service center where my car was being serviced. The spot had everything a remote worker could want: power outlets, comfortable couches, and decent Wi-Fi.

I figured I’d be able to finish a few tasks while waiting for my car. This year, the café had switched to a self-service model, and the process looked simple enough.

Around nine o’clock, the peaceful morning turned chaotic. A group of customers clustered near the coffee machine, which had been mysteriously switched off. Although several people had already paid for their drinks, no one could figure out how to turn it back on.

The service center staff rushed in to help, but in their attempt to fix the problem, they somehow managed to crash the cash register system. Now, both the coffee machine and the register were out of commission.

And a call went out to the service center’s tech support.

No network connection — no coffee

An hour later, at ten, the tension broke in the most unexpected way. The cleaning lady, who had been quietly going about her job, walked up to the coffee machine, gave the power switch a quick flick, and calmly began cleaning it as though nothing had happened. It was hard not to laugh.

At that moment, it was obvious who really kept the place running.

By eleven, my caffeine craving hit. The cash register was still frozen, so I decided to take matters into my own hands.

I pulled up the café’s website on my laptop and found a troubleshooting guide for rebooting the POS terminal and register.

Following the instructions carefully, I managed to get to the point where I could select my long-awaited coffee and a chocolate bar. But when it came time to pay, the POS terminal threw an error: no network connection.

I sighed and submitted a ticket via the website (old habits die hard).

It looked, for a short while, like the situation had improved

Around noon, the delivery guy who restocks the café finally showed up. He power-cycled the register and POS terminal, then crouched down to fiddle with the router.

After a few tense moments, the network came back online, and everything seemed to work.

Success. I finally got my coffee.

But what struck me most was that without my explanation, the delivery guy might not have even checked whether payments were going through. He most likely would have rebooted the hardware and left, oblivious to whether the problem was actually resolved.

For a while, things ran smoothly. About half an hour later, another customer struggled with the payment system, and I ended up walking him through the process. Feeling like an honorary employee, I returned to my work.

Thirty minutes after that, I went to grab a cold drink… and there it was again: the network was down.

Ding! Another ticket filed.

By this time, the previous customer had returned from the service center, ready to pick up his car. But he couldn’t settle the bill because the payment system was offline.

The staff called tech support again, only to be told to “jot it down” and have the client pay the next time he came in.

Meanwhile, I counted at least seven people who had abandoned their purchases because of the ongoing network failures. The staff themselves eventually started pouring cups of coffee, fully planning to pay later when the system came back.

Solid network monitoring and management is at the core of great service. Anywhere.

Watching the situation unfold, I couldn’t help but think: this entire mess could have been prevented with NetXMS (I know, I’m a bit biased here, but bear with me).

With NetXMS deployed, the café’s systems wouldn’t have been left to chance:

- Proactive monitoring: A NetXMS agent installed on the Windows-based register, or even simple SNMP monitoring of the router, would have immediately shown that the POS terminal and register had gone offline.

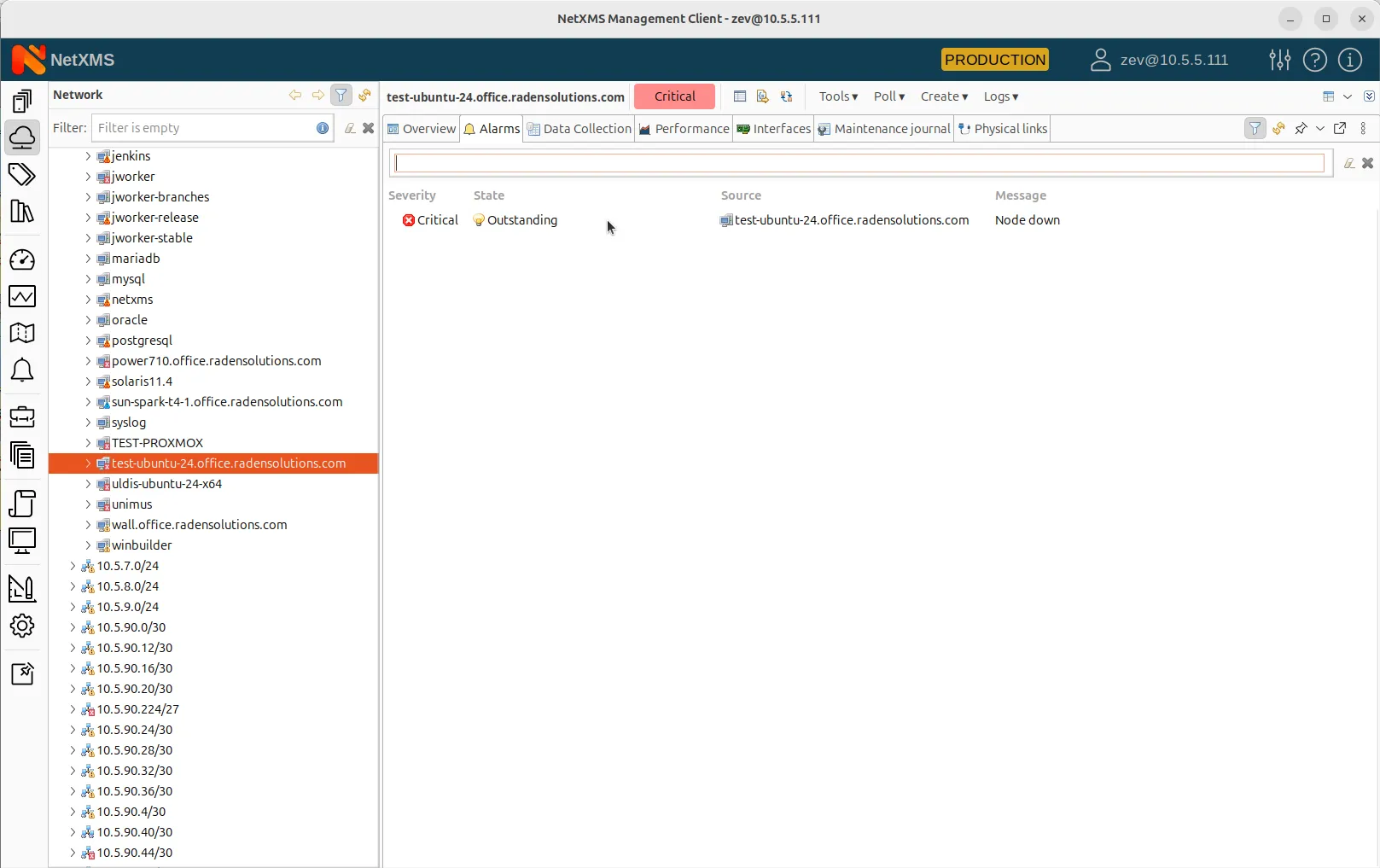

Critical alarm about the status of a node in the NetXMS monitoring system

- Automated alerts and quick diagnosis: A simple ping check on the POS terminal would have flagged the network drop before any customer experienced a failed payment. The staff could have been notified instantly, avoiding frustration at the counter.

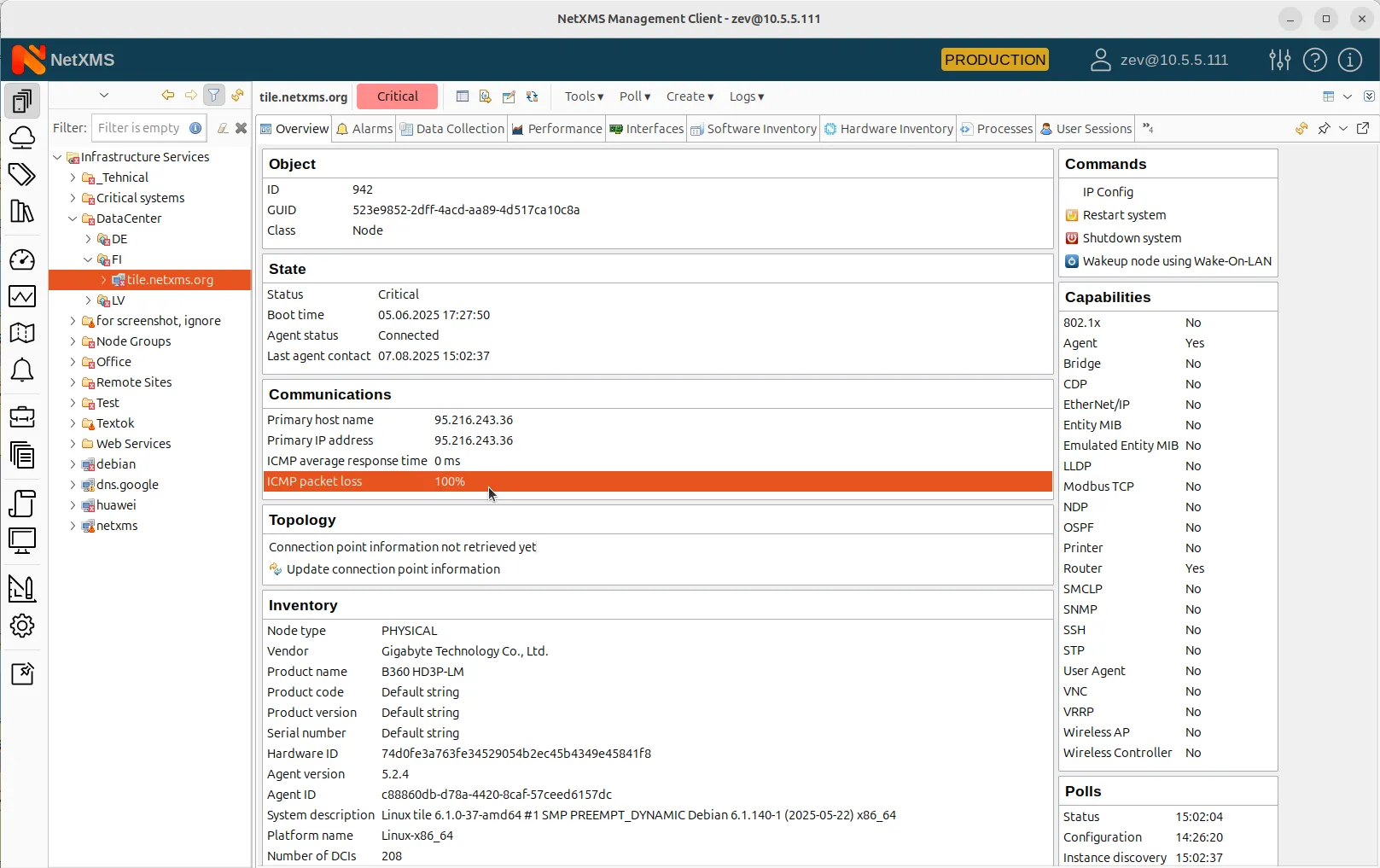

100% ICMP packet loss indicated in the Object Overview

- Remote remediation and remote visibility: With NetXMS, rebooting the register and POS terminal could have been done remotely. No waiting for a delivery guy to arrive. No downtime lasting hours.

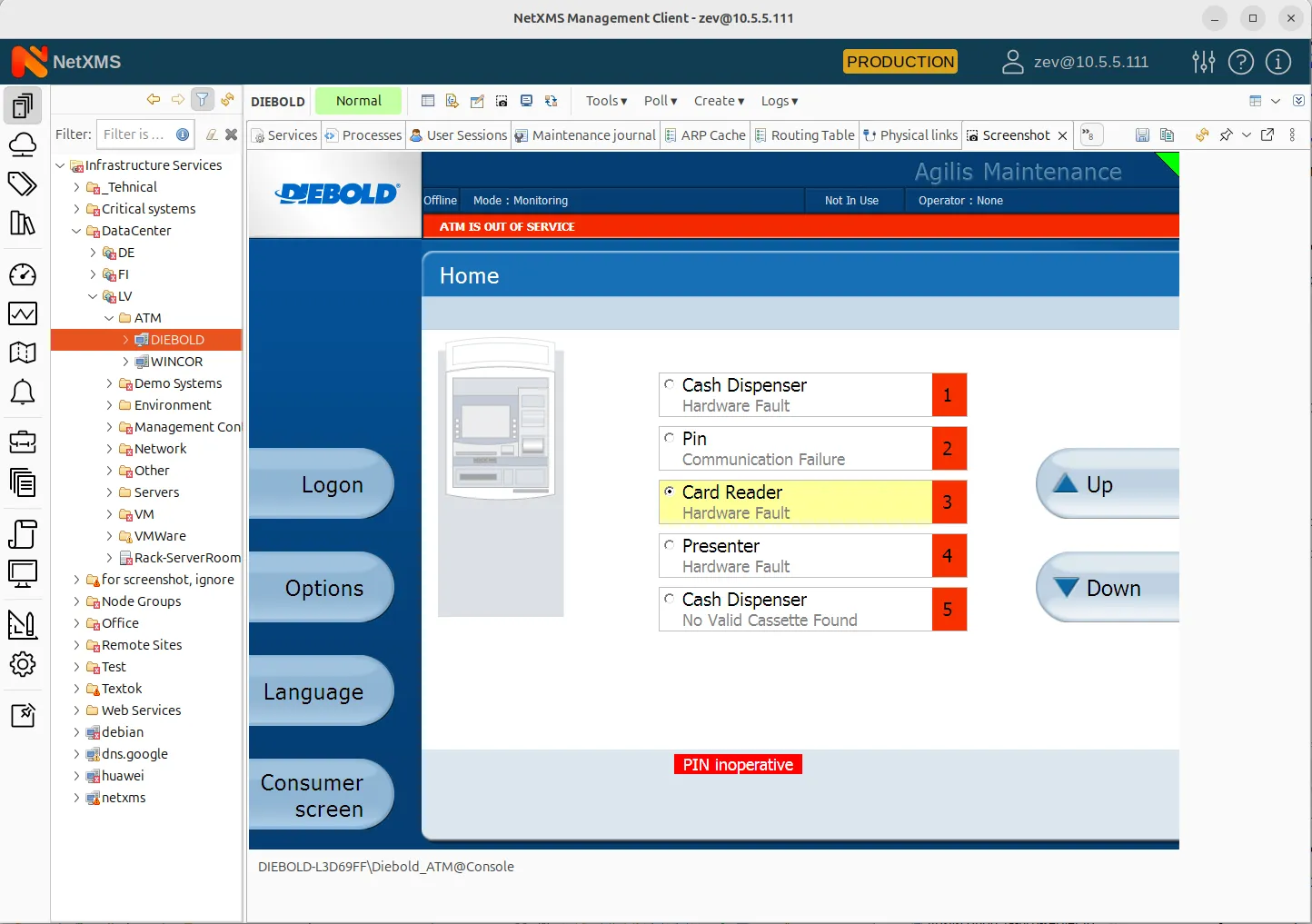

Screenshot taken remotely with NetXMS

- Offline data collection and historical analysis: Even in the event of a network outage, the NetXMS agent would continue collecting performance data locally, syncing it once the connection was restored.

This historical data would make it easy to pinpoint recurring problems and fix them at the root cause.

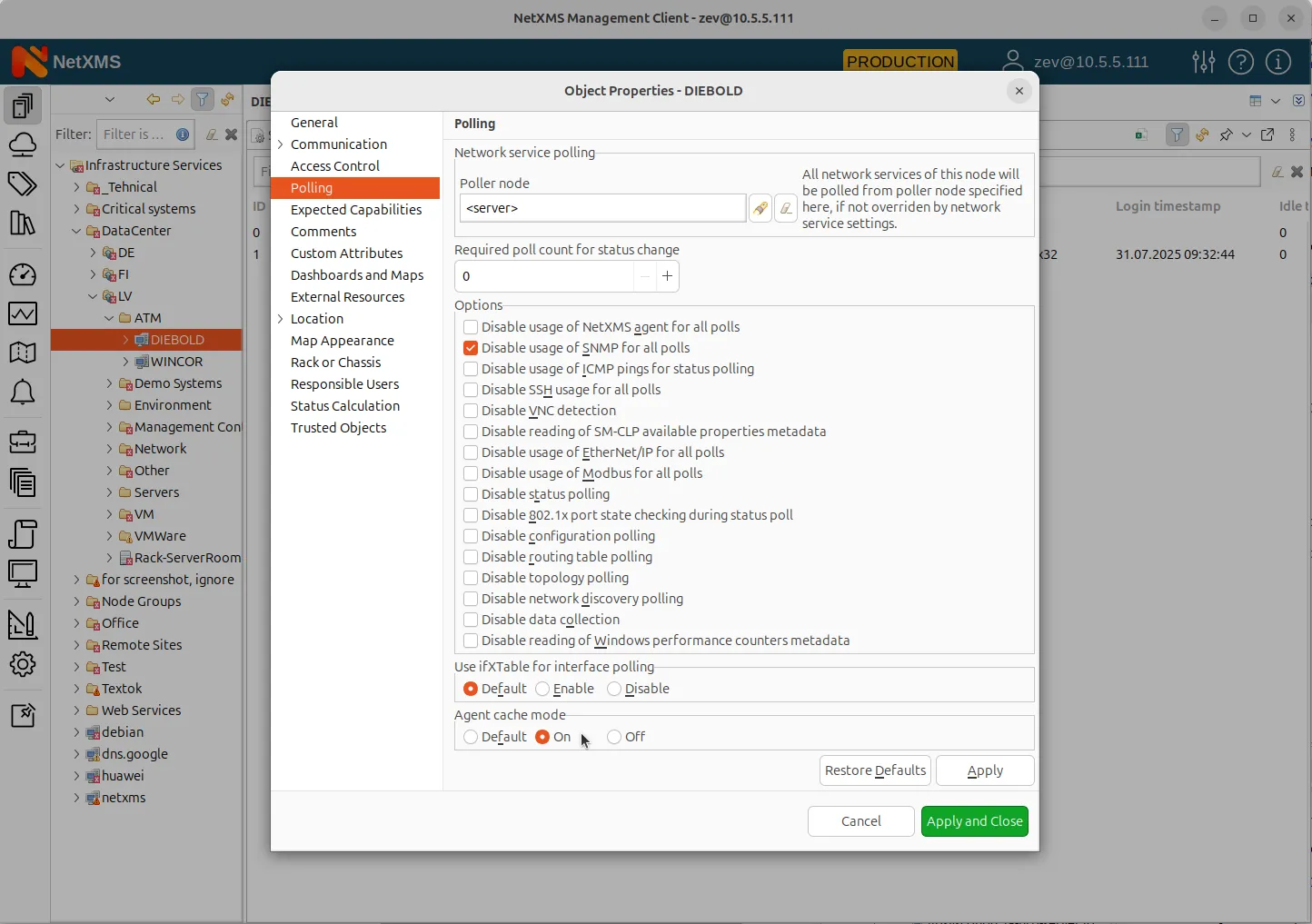

Node configuration of the agent cache mode, which accumulates data on the agent while the connection is interrupted
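To make two of the ideas above concrete, here is a minimal Python sketch of a reachability check that reports packet loss and of a store-and-forward buffer that holds samples while the link is down. It is not NetXMS code and does not use the NetXMS API; the names `tcp_probe`, `packet_loss`, and `SampleCache` are hypothetical, and a TCP connection attempt stands in for an ICMP ping because raw ICMP usually requires elevated privileges.

```python
import socket
from collections import deque
from typing import Callable, Deque, Tuple

Sample = Tuple[float, float]  # (timestamp, value); an assumed sample shape


def tcp_probe(host: str, port: int = 443, timeout: float = 1.0) -> bool:
    """Stand-in for an ICMP ping: True if a TCP connection opens in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def packet_loss(probe: Callable[[], bool], attempts: int = 4) -> float:
    """Run the probe `attempts` times and return the percentage that failed."""
    failures = sum(1 for _ in range(attempts) if not probe())
    return 100.0 * failures / attempts


class SampleCache:
    """Keeps samples locally while the server is unreachable (illustrative only)."""

    def __init__(self, max_samples: int = 1000) -> None:
        # Once the buffer is full, the oldest samples are dropped first.
        self._pending: Deque[Sample] = deque(maxlen=max_samples)

    def record(self, timestamp: float, value: float) -> None:
        self._pending.append((timestamp, value))

    def flush(self, send: Callable[[Sample], bool]) -> int:
        """Send buffered samples oldest first; stop at the first failure."""
        sent = 0
        while self._pending and send(self._pending[0]):
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

A caller might run `packet_loss(lambda: tcp_probe("192.0.2.10"))` on a schedule (the address is a documentation placeholder) and raise an alert when the result reaches 100, while calling `flush` with a real upload function each time the link comes back. A sample is removed from the buffer only after `send` reports success, so a second outage in the middle of a sync loses nothing that was not already delivered.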

The real cost here wasn’t just lost coffee sales. It was the disruption of customer experience, wasted staff time, and the perception that the business couldn’t handle basic operations.

All of it could have been solved with a lightweight, open-source network monitoring system designed to keep business-critical devices online and accountable.

When you think about it, what’s more expensive: deploying a simple open-source NetXMS network monitoring and management setup (that now takes about 30 seconds) or repeatedly losing revenue, reputation, and customer trust every time the network hiccups?

With NetXMS, you don’t just monitor networks. You ensure that the smallest failures don’t snowball into hours of downtime and lost sales. You keep business continuity intact. Right down to making sure everyone gets their morning coffee without having to take it on credit.